LTGC: Long-tail Recognition via Leveraging LLMs-driven Generated Content

(CVPR 2024, 🏅Oral)

* Equal contribution; † Corresponding author

Abstract

Long-tail recognition is challenging because it requires the model to learn good representations from tail categories and address imbalances across all categories. In this paper, we propose a novel generative and fine-tuning framework, LTGC, to handle long-tail recognition via leveraging generated content. Firstly, inspired by the rich implicit knowledge in large-scale models (e.g., large language models, LLMs), LTGC leverages the power of these models to parse and reason over the original tail data to produce diverse tail-class content. We then propose several novel designs for LTGC to ensure the quality of the generated data and to efficiently fine-tune the model using both the generated and original data. The visualization demonstrates the effectiveness of the generation module in LTGC, which produces accurate and diverse tail data. Additionally, the experimental results demonstrate that our LTGC outperforms existing state-of-the-art methods on popular long-tailed benchmarks.

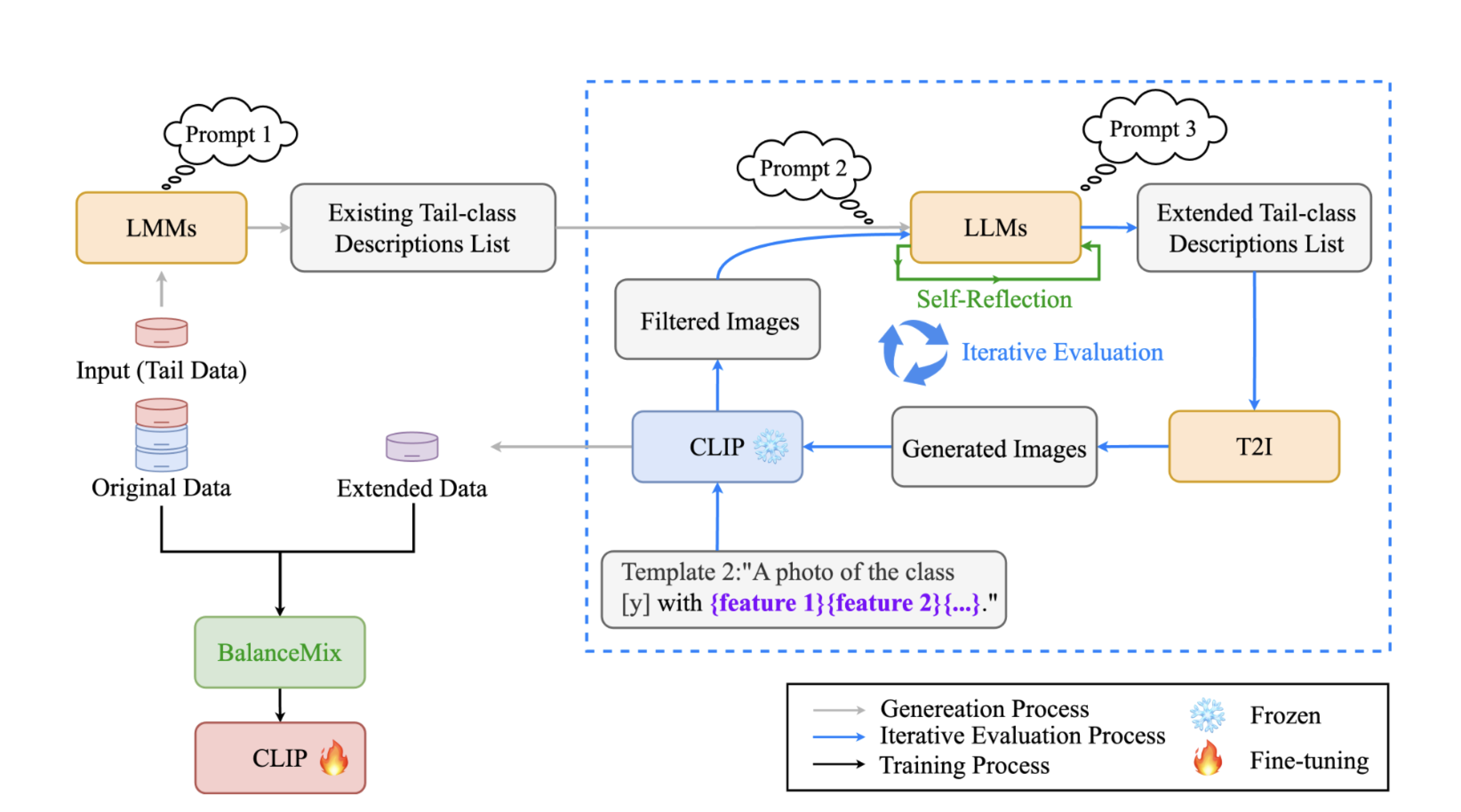

Overall framework of LTGC. LTGC first uses large multimodal models (LMMs) to analyze the existing tail data and obtain a list of existing tail-class descriptions. It then feeds this list into LLMs to infer features the tail classes lack, and uses a text-to-image (T2I) model to generate diverse images. The self-reflection and iterative evaluation modules ensure the diversity and quality of the generated tail data. Finally, LTGC uses the BalanceMix module to fine-tune CLIP's visual encoder on the extended and original data.
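The caption names BalanceMix but does not say how it combines the two data sources. The sketch below is a hedged illustration only. It assumes a mixup-style step in which an original sample is interpolated with a generated sample drawn class-uniformly, using one weight λ ~ Beta(α, α) for both the inputs and the one-hot labels. `class_balanced_pick`, `mix_original_and_generated` and `alpha` are hypothetical names. This is not claimed to be the exact BalanceMix formulation.

```python
# Hypothetical sketch of a mixup-style combination of original and generated
# data; NOT the official BalanceMix implementation.
import random
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def class_balanced_pick(pool: Dict[int, List[Vector]],
                        rng: random.Random) -> Tuple[int, Vector]:
    """Pick a class uniformly at random, then one of its samples."""
    cls = rng.choice(sorted(pool))
    return cls, rng.choice(pool[cls])


def one_hot(label: int, num_classes: int) -> List[float]:
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def mix_original_and_generated(
    x_orig: Vector, y_orig: int,
    gen_pool: Dict[int, List[Vector]], num_classes: int,
    alpha: float = 1.0, rng: Optional[random.Random] = None,
) -> Tuple[List[float], List[float], float]:
    """Interpolate an original sample with a class-balanced generated one.

    The same lam ~ Beta(alpha, alpha) mixes the inputs and the one-hot labels.
    """
    rng = rng if rng is not None else random.Random()
    y_gen, x_gen = class_balanced_pick(gen_pool, rng)
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_orig, x_gen)]
    y = [lam * a + (1.0 - lam) * b
         for a, b in zip(one_hot(y_orig, num_classes),
                         one_hot(y_gen, num_classes))]
    return x, y, lam


# Usage: one original sample of class 0 and a tiny generated pool.
rng = random.Random(0)
gen_pool = {2: [[1.0, 1.0, 1.0]], 3: [[2.0, 2.0, 2.0]]}
x, y, lam = mix_original_and_generated([0.0, 0.0, 0.0], 0, gen_pool, 4, rng=rng)
print(round(sum(y), 6))  # the mixed label still sums to 1
```

Because the generated sample is drawn class-uniformly, a tail class with only a few generated images is sampled as often as any other class. Under this assumption, that is the property the balancing step is meant to provide.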

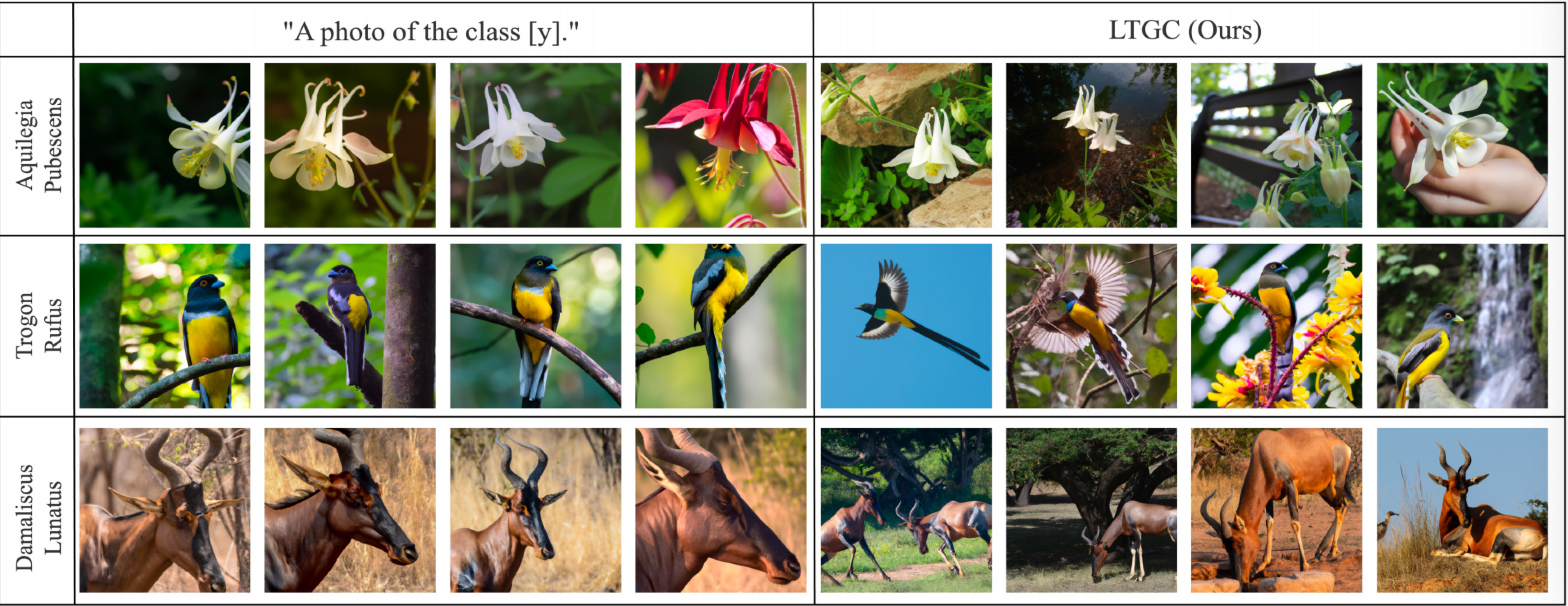

Visualization of generated images: the template "A photo of the class [y]" vs. LTGC

Each row shows a different class. The four images on the left were generated with the simple template "A photo of the class [y]", which yields images with uniform poses and plain backgrounds. The four images on the right were generated by LTGC and show much greater within-class diversity.
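The contrast above comes down to how the T2I prompts are built. The minimal sketch below assumes each extended tail-class description is appended to the template as a separate prompt. `template_prompts`, `description_prompts` and the description strings are hypothetical and made up for illustration. In LTGC the descriptions come from the LMM/LLM stages. Here, dropping exact duplicates is only a crude stand-in for a diversity check.

```python
# Hypothetical sketch contrasting a fixed template with description-driven
# prompts; the description strings are made-up examples.
from typing import List

TEMPLATE = "A photo of the class {y}"


def template_prompts(class_name: str, n: int) -> List[str]:
    """Baseline: every image of the class gets the same prompt."""
    return [TEMPLATE.format(y=class_name)] * n


def description_prompts(class_name: str, descriptions: List[str]) -> List[str]:
    """One prompt per distinct description, preserving order.

    `descriptions` stands in for extended tail-class descriptions; exact
    duplicates are dropped because they would not add diversity.
    """
    unique = list(dict.fromkeys(descriptions))
    return [f"{TEMPLATE.format(y=class_name)}, {d}" for d in unique]


baseline = template_prompts("Triumphal Arch", 4)
varied = description_prompts(
    "Triumphal Arch",
    ["at sunset with a crowd", "seen from below in fog",
     "lit at night", "at sunset with a crowd"],
)
print(len(set(baseline)), len(set(varied)))  # 1 3
```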

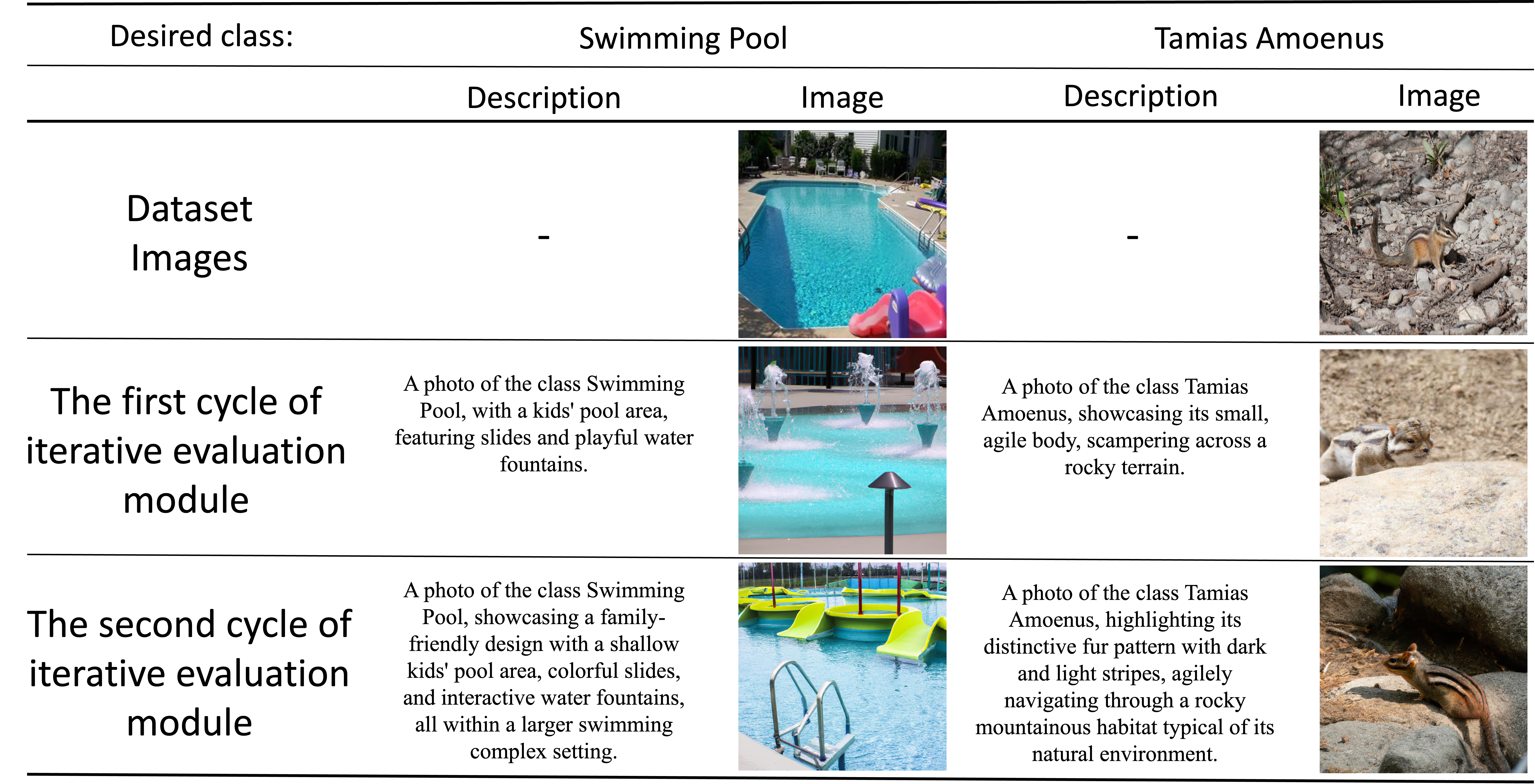

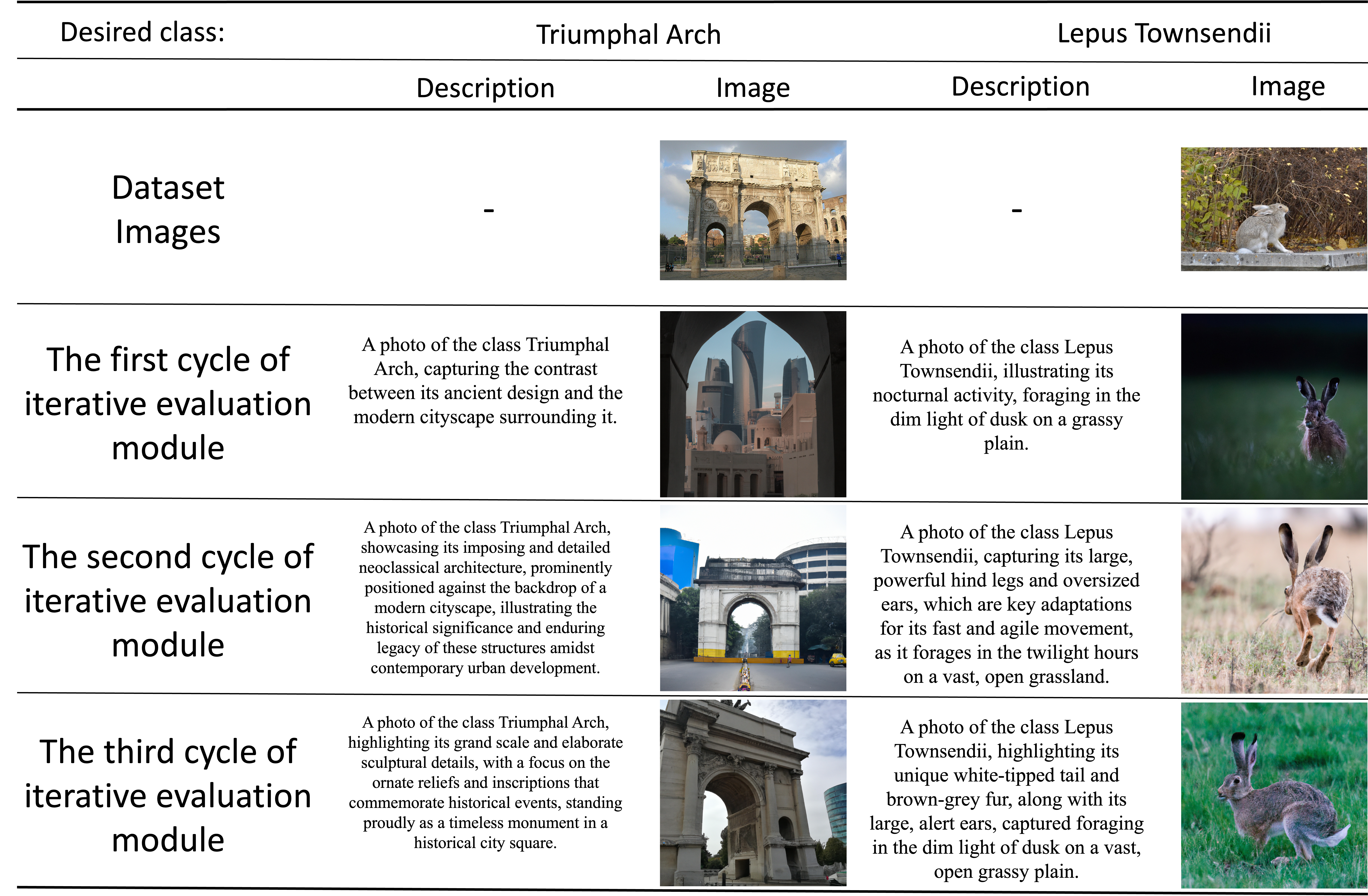

Visualization of the iterative evaluation module

The generated images for Tamias amoenus and Lepus townsendii only align most closely with their intended classes in the third cycle. By contrast, the images for Swimming Pool and Triumphal Arch already achieve their closest alignment in the second cycle. Tamias amoenus and Lepus townsendii come from the iNaturalist dataset, while Swimming Pool and Triumphal Arch come from the Places-LT and ImageNet-LT datasets, respectively.
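The caption reports results per evaluation cycle but does not define the loop itself. The minimal sketch below assumes the module works as a bounded generate, check and refine loop. In each cycle it generates an image and checks whether a classifier assigns it to the intended class. If not, it refines the prompt and retries. `iterative_generate`, `generate`, `classify`, `refine` and `max_cycles` are all hypothetical names. In practice the checker might be a zero-shot CLIP classifier, but that is an assumption here.

```python
# Hypothetical generate-check-refine loop; generate, classify and refine are
# placeholders for a T2I model, a zero-shot classifier and an LLM rewrite.
from typing import Any, Callable, Optional, Tuple


def iterative_generate(
    prompt: str,
    target: str,
    generate: Callable[[str], Any],
    classify: Callable[[Any], str],
    refine: Callable[[str, str], str],
    max_cycles: int = 3,
) -> Tuple[Optional[Any], int]:
    """Regenerate until `classify` assigns the image to `target`.

    Returns (image, cycle) on success, or (None, max_cycles) if every cycle
    produced an off-target image.
    """
    for cycle in range(1, max_cycles + 1):
        image = generate(prompt)
        predicted = classify(image)
        if predicted == target:
            return image, cycle
        # Feed the wrong prediction back so the next prompt can correct it.
        prompt = refine(prompt, predicted)
    return None, max_cycles


# Toy stubs: the "image" is the prompt itself, and the classifier only
# accepts it once two refinements have been appended.
def gen(p: str) -> str:
    return p


def clf(img: str) -> str:
    return "Lepus townsendii" if img.count("+") >= 2 else "other hare"


def ref(p: str, wrong: str) -> str:
    return p + "+"


print(iterative_generate("hare", "Lepus townsendii", gen, clf, ref))  # ('hare++', 3)
```

In this sketch, the returned cycle index plays the role of the cycle counts in the figure. The toy example succeeds in the third cycle, and it would return cycle `1` if the initial prompt were already on target.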

Citation

@article{zhao2024ltgc,
  title={LTGC: Long-tail Recognition via Leveraging LLMs-driven Generated Content},
  author={Zhao, Qihao and Dai, Yalun and Li, Hao and Hu, Wei and Zhang, Fan and Liu, Jun},
  journal={arXiv preprint arXiv:2403.05854},
  year={2024}
}

Acknowledgments

The website style was inspired by DreamFusion and TCD.